In simple terms, a PCM audio recording is the digital rendition of an analog waveform. Its purpose is to reproduce the analog sound as closely as possible to its source, which is referred to as high fidelity. Analog audio is converted into digital audio by a process known as sampling, which is characterized by the sampling rate and the bit depth.

First, we will define PCM audio. Then, we’ll discuss how analog audio is converted to digital. We will also compare PCM against Dolby Digital and Bitstream. Let’s take a look.

What is PCM Audio?

PCM audio (pulse code modulation) is a technique for converting analog audio signals, represented as waveforms, into digital audio signals, represented as ones and zeros, without compression or loss of data.

It also allows recorded sound, whether a musical performance or a movie soundtrack, to be stored on a physically smaller medium without sacrificing quality.

Compare the dimensions of a vinyl disc (analog) with the size of a CD (digital) to get an idea of how much space analog and digital audio take up.

PCM audio streams have two fundamental characteristics that determine their fidelity to the original analog signal: the sampling rate and the bit depth.

- The sampling rate is the number of times per second that samples are taken.

- The bit depth is the number of bits of information in each sample and determines the number of possible digital values each sample may have.

PCM audio is a standard audio format on consumer digital discs such as CDs, DVDs, and Blu-ray Discs.

The fidelity of a PCM audio stream to the original analog signal is determined by these two basic properties, the sampling rate and the bit depth. Converting analog sound into digital form is the basis of digital audio: the sound is reproduced from digital signals. Digital signals are much more straightforward to work with, as they can be copied and adjusted without compromising the sound quality.

Digital audio has been part of the recording industry since the late 1970s, following recording experiments in the 1960s. The most common format for digital audio is PCM, or pulse code modulation.

Pulse code modulation (PCM) is a representation of an analog signal in digital form. PCM audio has become the preferred format for audio playback on computers and is also used on audio CDs. The format for audio CDs is defined in the “Red Book” standard, which is licensed by Royal Philips Electronics (Philips). PCM audio starts as an analog signal, which is sampled, quantized, and then encoded as a numerical code, generally binary.

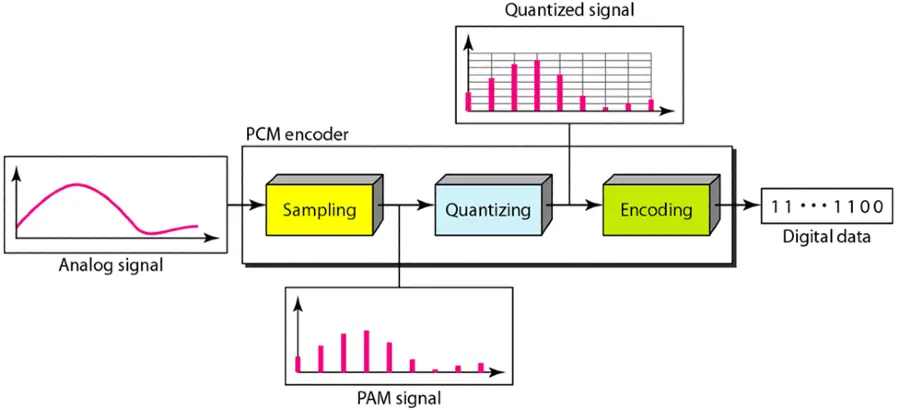

Conversion To Digital Pulse Code Modulation audio:

Converting analog sound to digital PCM may not be easy, depending on the content you are trying to convert, the required audio quality, and how the information will be stored, transmitted, and distributed.

A process known as sampling is used to convert analog audio to digital PCM audio.

Digital audio, as mentioned, is composed of a series of ones and zeros. Analog sound travels in waves.

To capture analog sound as PCM audio, the signal from an analog source such as a microphone is sampled at specific points in time.

Another part of the process is how precisely the level of the analog waveform is measured at each sampling point, expressed as a number of bits. Sampling at more points and using more bits per sample gives greater accuracy at the listener’s end.

An example of this is the audio CD. The analog waveform is sampled 44,100 times per second (44.1 kHz) with 16 bits per sample (the bit depth). The digital audio standard for CD audio is therefore 44.1 kHz/16 bits.

Pulse Code Modulation Audio and Home Cinema:

PCM audio is used on CDs, DVDs, and Blu-ray Discs. It is sometimes referred to as Linear PCM or Linear Pulse Code Modulation (LPCM).

The digital output of PCM is a series of binary codes. Each of these codes represents the approximate amplitude of the signal sample at a given instant.

In pulse code modulation, a sequence of coded pulses is used to represent the message signal. The message signal is represented in discrete form in both amplitude and time.

Pulse code modulation (PCM), a method that transforms an analog signal into a digital signal, was developed by the British engineer Alec Reeves in 1937. It is the most common form of digital audio found in computers, compact discs, and similar applications. In a PCM stream, the amplitude of the analog signal is sampled at regular intervals, and each sample is quantized to the nearest value within a range of digital steps.

Linear pulse code modulation (LPCM) is a type of PCM in which the quantization levels are uniformly spaced. This contrasts with PCM encodings in which the quantization levels vary with the amplitude of the sampled signal, as in the A-law and mu-law algorithms. Although PCM is the more general term, it is often used to refer to linearly encoded signals, that is, LPCM.

Basic properties of PCM audio streams determine their fidelity to the original analog signal:

The sampling rate is the number of samples taken per second, and the bit depth is the number of bits in each sample, which determines the number of digital values each sample can take. The method consists of sampling the amplitude of the signal at regular intervals, quantizing the values read, and finally encoding them (generally in binary form).

PCM audio is ubiquitous in telephony systems, and it also underpins many video standards, such as ITU-R BT.601. Because uncompressed PCM requires high bit rates, consumer video standards such as DVD and DVR commonly rely on PCM audio variants that use compression. PCM coding is also used frequently to facilitate digital transmission of serial data.

Is Pulse Code Modulation Audio Better Than Dolby Digital?

In terms of fidelity, PCM audio can be better than Dolby Digital audio, because PCM is not compressed, whereas Dolby Digital audio is. Dolby Digital takes up less space because of its compression, while PCM retains greater fidelity to the source tracks.

Dolby TrueHD, on the other hand, is a lossless compressed audio format, comparable to a zip archive, and is almost identical to PCM once decoded. While there are technical distinctions between the two formats, they are virtually identical in terms of sound quality and fidelity to the source.

PCM vs. Bitstream:

Different equipment lets you choose between PCM and Bitstream output. There is no one right answer to the question of which you should choose. It all depends on your specific needs.

Pros And Cons Of Pulse Code Modulation audio:

Pros:

Higher interference tolerance is a benefit of digital signal coding such as PCM audio. The receiver only has to distinguish between a high and a low signal (1 or 0), rather than recover an exact analog level. Each type of modulation has a different resistance to random or systematic errors. Unlike with other types of modulation, regenerative amplifiers can eliminate sinusoidal interfering signals such as mains hum. The technique is now used both in communications technology and in areas traditionally served by analog technology (high fidelity).

Cons:

PCM audio coding has the disadvantage that it requires high data transfer rates (approx. 1.4 Mbit/s on the audio CD). Because of this, various PCM methods have been modified and extended, and the amount of digital information is reduced via source coding.
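The figure of roughly 1.4 Mbit/s for the audio CD follows from multiplying the sampling rate, the bit depth, and the number of channels. The following is a minimal sketch of that arithmetic; the function name `pcm_bit_rate` is made up for this example, and the two-channel (stereo) value is an assumption that the text above does not state explicitly.

```python
def pcm_bit_rate(sample_rate_hz, bit_depth, channels):
    """Return the raw bit rate of an uncompressed PCM stream in bits per second."""
    return sample_rate_hz * bit_depth * channels


# Audio CD: 44.1 kHz, 16 bits per sample, 2 channels (stereo is assumed here).
cd_bit_rate = pcm_bit_rate(44_100, 16, 2)

# Storage needed for one minute of audio at that rate, in bytes.
cd_bytes_per_minute = cd_bit_rate // 8 * 60

print(f"{cd_bit_rate / 1_000_000:.2f} Mbit/s")
print(f"{cd_bytes_per_minute / 1_000_000:.1f} MB per minute")
```

Halving the sampling rate, the bit depth, or the channel count halves the bit rate, which is why compressed formats are attractive when bandwidth or storage is limited.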

Modulation:

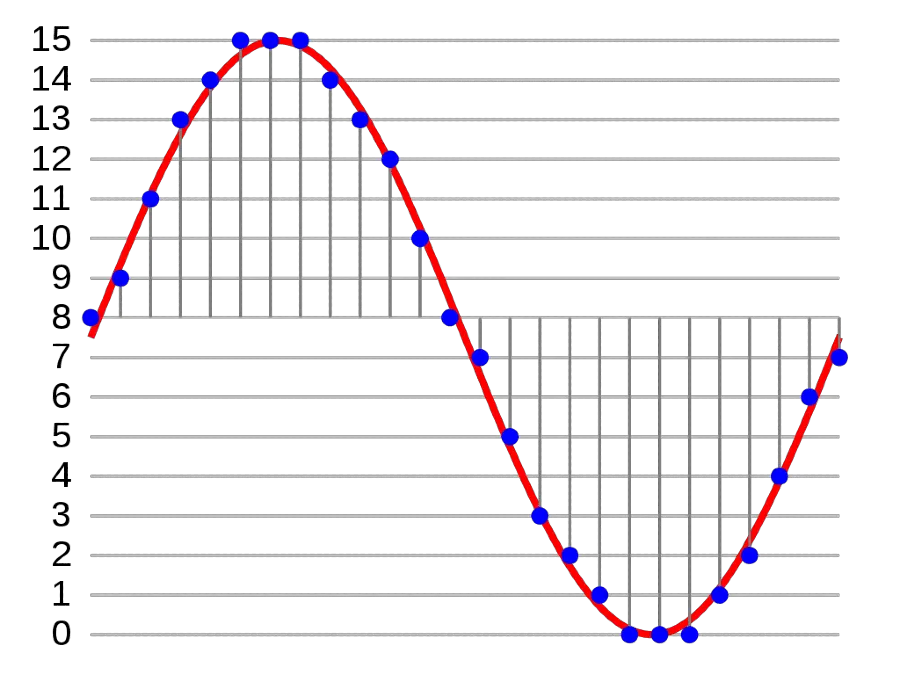

The figure shows a sine wave (in red) that has been sampled and quantized using PCM. Vertical gray lines show that samples are taken at regular intervals. For each sample, an algorithm selects one of the available values (on the y-axis). The result is a discrete representation of the input signal (blue dots) that can easily be encoded as digital data for later manipulation or storage.

For the sine wave example, the quantized values at the sampling moments are 8, 9, 11, 13, 14, 15, 15, 15, 14, and so on. Encoding these values as binary numbers gives the following four-bit numbers, or nibbles: 1000, 1001, 1011, 1101, 1110, 1111, 1111, 1111, 1110, and so on.
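The sampling and quantization steps described here can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `pcm_encode` and `to_binary` are invented for this example, the input is assumed to lie in the range -1.0 to 1.0, and the sine wave parameters are arbitrary, so the output is not meant to reproduce the exact values in the figure.

```python
import math


def pcm_encode(signal, sample_rate, duration, bits):
    """Sample signal(t) at regular intervals and quantize each sample.

    Assumes signal(t) returns values in [-1.0, 1.0]. Each sample is mapped
    to the nearest of 2**bits uniformly spaced levels (linear PCM).
    """
    levels = 2 ** bits
    codes = []
    for i in range(int(sample_rate * duration)):
        t = i / sample_rate                           # sampling instant
        x = signal(t)                                 # analog amplitude
        q = round((x + 1.0) / 2.0 * (levels - 1))     # nearest level
        codes.append(max(0, min(levels - 1, q)))      # clip to valid range
    return codes


def to_binary(codes, bits):
    """Encode each quantized level as a fixed-width binary string."""
    return [format(code, f"0{bits}b") for code in codes]


def sine(t):
    """A 1 Hz sine wave (arbitrary example signal)."""
    return math.sin(2 * math.pi * t)


codes = pcm_encode(sine, sample_rate=16, duration=1.0, bits=4)
print(codes)
print(to_binary(codes, bits=4))
```

With 4 bits there are only 16 levels, so the quantization steps are clearly visible; raising `bits` to 16 gives the 65,536 levels used on an audio CD.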

These digital values can then be further processed or analyzed by a digital signal processor. Several PCM streams can also be combined into one larger data stream, so that multiple streams can be transmitted over a single physical link. This technique is known as time-division multiplexing (TDM) and is used extensively in modern public telephony systems.
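As a rough illustration of time-division multiplexing, the sketch below interleaves one sample from each stream in turn and then separates them again. The function names `tdm_multiplex` and `tdm_demultiplex` are hypothetical, and real systems add framing and synchronization information that is left out here.

```python
def tdm_multiplex(streams):
    """Interleave equally long PCM streams, one sample per channel per frame."""
    if len({len(stream) for stream in streams}) > 1:
        raise ValueError("all streams must have the same length")
    # zip(*streams) yields one frame at a time: (ch0[i], ch1[i], ch2[i], ...)
    return [sample for frame in zip(*streams) for sample in frame]


def tdm_demultiplex(link, n_channels):
    """Recover the individual streams from the interleaved link data."""
    return [link[channel::n_channels] for channel in range(n_channels)]


channel_a = [1, 2, 3]
channel_b = [10, 20, 30]
channel_c = [100, 200, 300]

link = tdm_multiplex([channel_a, channel_b, channel_c])
print(link)                      # samples from a, b, c alternate on the link
print(tdm_demultiplex(link, 3))  # the three original streams
```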

PCM encoding is commonly implemented in a single integrated circuit known as an analog-to-digital converter (ADC).

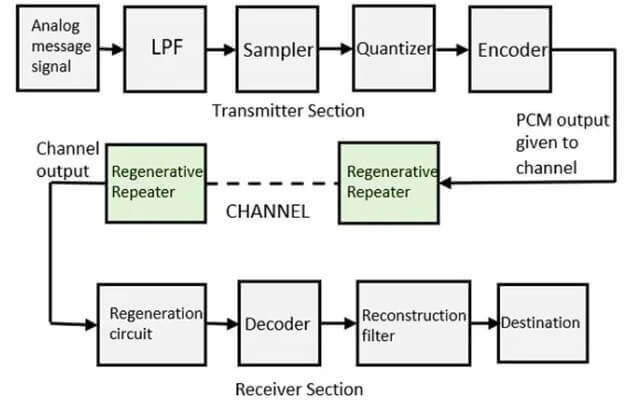

The illustration shows the arrangement of elements in a system that uses pulse code modulation. For simplicity, only the elements needed to transmit three channels are shown.

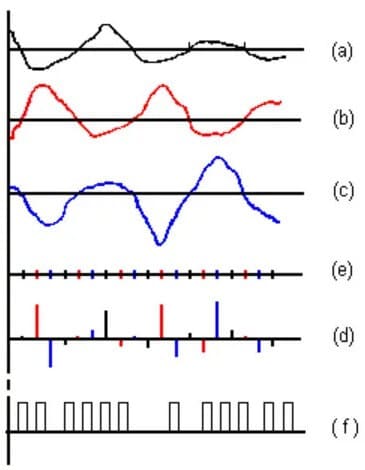

The accompanying figure shows the waveforms at different points within the system.

Descriptions of PCM audio can vary in terms of the quantization techniques involved. Here are some of the most frequently used terms:

- Pulses are short electrical signals used for transmission.

- The process of varying the characteristics of signals in order to transmit information is known as modulation.

- Demodulation is the process of recovering the original data or signal from a modulated signal.

- Sampling is converting a continuous signal into a discrete signal, that is, a set of values each taken at a single point in time.

Sampling:

Sampling involves taking samples (measurements of the signal) n times per second, yielding n voltage levels per second. A voice telephone channel is sampled 8,000 times per second, which is one sample every 125 microseconds. The sampling theorem says that when an electrical signal is sampled at a frequency of at least twice the signal’s maximum frequency, the samples contain all of the information required to reconstruct that signal.

Because a sampling frequency of 8 kHz is used in this example, signals with frequencies of up to 4 kHz can be transmitted. This is sufficient for the voice telephone channel, whose maximum frequency is 3.4 kHz.
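The relationship between the sampling frequency, the sampling interval, and the highest frequency that can be represented can be checked with a short calculation. The sketch below is illustrative only; the helper names are invented for this example, and `alias_frequency` uses the standard folding rule to show where a tone above half the sampling frequency would appear after sampling.

```python
def sampling_interval_us(sample_rate_hz):
    """Time between two consecutive samples, in microseconds."""
    return 1_000_000 / sample_rate_hz


def nyquist_frequency(sample_rate_hz):
    """Highest frequency that can be represented at this sampling rate."""
    return sample_rate_hz / 2


def alias_frequency(tone_hz, sample_rate_hz):
    """Apparent frequency of a sampled tone (frequency folding)."""
    folded = tone_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)


fs = 8000  # telephony sampling rate in Hz

print(sampling_interval_us(fs))   # 125.0 microseconds between samples
print(nyquist_frequency(fs))      # 4000.0 Hz
print(alias_frequency(3400, fs))  # 3400: inside the band, preserved
print(alias_frequency(5000, fs))  # 3000: above 4 kHz, folds back
```

The last line shows why the input has to be band-limited before sampling: a 5 kHz tone sampled at 8 kHz is indistinguishable from a 3 kHz tone.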

The time between samples (125 µs) can be used to sample other channels by means of time-division multiplexing.

Quantization:

Quantization is the process by which each voltage level obtained during sampling is assigned a discrete value. In principle, the voice samples in a telephone conversation can take an infinite number of values within the intensity range of the voice, which in a telephone channel is about 60 decibels. To make things easier, the closest value from a set of predetermined values is chosen.

There are numerous variants of PCM audio, differing in the signal processing and the quantization used. Quantization methods can be linear, logarithmic, or adaptive.

Each application processes the signal according to its own set of rules. Audio applications generally use linear quantization, and sample rates differ between audio applications such as CDs. Higher sampling rates are associated with greater bandwidth. Telephony employs nonlinear quantization with limited bandwidth.

Encoding:

Encoding is the process of assigning a unique binary code to each quantization level. In the third figure, (f) denotes the resulting waveform.

In telephony, an analog voice signal with a bandwidth of 4 kHz is converted to a digital signal of 64 kbps, calculated by multiplying the sampling frequency (2 × 4 kHz = 8 kHz) by eight bits per sample. With the E1 digital format, multiple voice channels can be transmitted using plesiochronous transmission, in which 29 additional voice signals are interleaved with the first. In total, 32 × 64 kbps = 2048 kbps is transmitted: 30 channels for voice signals, one channel for signaling, and one for synchronization.
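The telephony figures above can be reproduced with a few lines of arithmetic. This is just a worked check of the numbers in the text; the constant names are chosen for this example.

```python
VOICE_BANDWIDTH_HZ = 4000   # nominal voice channel bandwidth
BITS_PER_SAMPLE = 8

# The sampling theorem calls for at least twice the bandwidth.
sample_rate_hz = 2 * VOICE_BANDWIDTH_HZ                  # 8000 samples/s
channel_rate_bps = sample_rate_hz * BITS_PER_SAMPLE      # one voice channel

# E1 frame: 30 voice channels + 1 signaling + 1 synchronization time slot.
E1_VOICE_SLOTS = 30
E1_SIGNALING_SLOTS = 1
E1_SYNC_SLOTS = 1
e1_slots = E1_VOICE_SLOTS + E1_SIGNALING_SLOTS + E1_SYNC_SLOTS
e1_rate_bps = e1_slots * channel_rate_bps

print(channel_rate_bps // 1000, "kbps per channel")
print(e1_rate_bps // 1000, "kbps for the E1 link")
```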

Demodulation:

A demodulator reverses the modulation process to recover the original signal from the sampled data. After each sampling interval, the demodulator reads the next value and moves the output signal to that value. Because of these abrupt transitions, the output contains a significant amount of unwanted high-frequency energy.

To remove these unwanted frequencies, the demodulator passes the signal through analog filters that suppress energy outside the expected frequency range, preserving only the intended signal.

The sampling theorem shows that PCM audio devices can operate without introducing distortion within their designed frequency bands, provided that the sampling rate is at least twice the highest frequency contained in the input signal.

In traditional systems, the quantization intervals were chosen so as to minimize distortion, so that the recovered signals were as close as possible to their original counterparts. When a signal is recovered, the level used for each sample is the midpoint of the quantization interval in which the sample lies.

The circuitry involved in creating an accurate analog signal from discrete data is similar to the circuitry that generates the digital signals. This circuit is referred to as a digital-to-analog converter (DAC).

Limitations Of Pulse Code Modulation Systems:

There are many possible sources of deficiencies inherent to any audio system using PCM:

- Quantization error arises because the discrete value chosen for each sample is close to, but not exactly equal to, the level of the analog signal.

- No measurement of the signal is made between samples. The sampling theorem guarantees unambiguous representation and recovery of the signal only if it has no energy at or above half of the sampling frequency, also known as the Nyquist frequency; higher frequencies are typically not correctly represented or recovered.

- Because the samples are time-dependent, a precise clock signal is required to ensure accurate reproduction.

Digitization As Part of The Pulse Code Modulation Process:

In standard PCM audio, the analog signal may be processed (e.g., by amplitude compression) before it is digitized. After the signal has been digitized, the PCM audio stream is typically processed further (e.g., by digital data compression).

Certain forms of PCM audio integrate signal processing with the encoding process. Earlier versions of these systems applied the processing in the analog domain as part of the analog-to-digital conversion. More recent versions do this in the digital domain. These simple methods are generally regarded as obsolete in comparison to modern transform-based audio compression techniques:

- DPCM (differential PCM) encodes the PCM audio data as the differences between the actual and the predicted values of the input signal. An algorithm predicts the next sample based on the previous ones, and the encoder stores only the difference between the prediction and the actual value. If the prediction is accurate, fewer bits are needed to convey the same information. For audio, this method of encoding can reduce the number of bits needed per sample by approximately 25% compared to PCM.

- ADPCM (adaptive DPCM) is a variation of DPCM that varies the size of the quantization step to further reduce the bandwidth needed for a given signal-to-noise ratio.

- Delta modulation is a form of DPCM that uses one bit for each sample.
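The idea behind DPCM can be sketched with the simplest possible predictor. In the example below, which is an illustration rather than any standardized codec, the prediction for each sample is assumed to be simply the previous sample, and the differences are stored without further quantization, so decoding is exact. The function names are invented for this example.

```python
def dpcm_encode(samples):
    """Encode integer PCM samples as differences from a prediction.

    Assumption: the predictor is the previous sample (0 before the first).
    """
    differences = []
    predicted = 0
    for sample in samples:
        differences.append(sample - predicted)  # store only the error
        predicted = sample                      # prediction for next sample
    return differences


def dpcm_decode(differences):
    """Rebuild the PCM samples by accumulating the stored differences."""
    samples = []
    predicted = 0
    for difference in differences:
        predicted += difference
        samples.append(predicted)
    return samples


pcm = [100, 102, 105, 104, 101]
encoded = dpcm_encode(pcm)
print(encoded)               # small numbers after the first value
print(dpcm_decode(encoded))  # identical to the input
```

Because neighboring audio samples tend to be close in value, the differences are usually much smaller than the samples themselves and can be stored in fewer bits. ADPCM goes further by adapting the quantization step applied to these differences.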

In telephony, the standard audio signal for a single call is encoded as 8,000 samples per second with 8 bits per sample, resulting in a 64 kbps digital signal known as DS0. The default compression method used on a DS0 signal is either mu-law PCM (in North America and Japan) or A-law PCM (in Europe and most of the rest of the world). These are logarithmic compression systems in which a linear 12- or 13-bit PCM sample is mapped to an 8-bit value. This method is described in the G.711 standard. An alternative proposal for a floating-point representation with a 5-bit mantissa and a 3-bit radix was rejected.
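The logarithmic compression idea can be illustrated with the continuous mu-law formula, using mu = 255 as in North American and Japanese telephony. Note that this is a sketch of the companding curve only: the G.711 standard specifies a segmented 8-bit encoding that approximates this curve, which is not reproduced here, and the function names are chosen for this example.

```python
import math

MU = 255  # value used for mu-law telephony


def mu_law_compress(x, mu=MU):
    """Map a linear sample x in [-1, 1] onto the mu-law curve in [-1, 1]."""
    return math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)


def mu_law_expand(y, mu=MU):
    """Inverse of mu_law_compress: recover the linear sample."""
    return math.copysign(((1 + mu) ** abs(y) - 1) / mu, y)


for x in (0.01, 0.1, 0.5, 1.0):
    y = mu_law_compress(x)
    print(f"x={x:<5} compressed={y:.3f} expanded={mu_law_expand(y):.3f}")
```

Quiet samples are spread over a much larger share of the output range than loud ones, so after quantizing the compressed value to 8 bits, low-level speech keeps finer resolution than it would with 8-bit linear PCM.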

If circuit costs are high and some loss of voice quality is acceptable, it sometimes makes sense to compress the voice signal even further. The ADPCM algorithm is used to convert a series of 8-bit mu-law or A-law PCM samples into a series of 4-bit ADPCM samples. In this way, the line’s capacity is doubled. The method is described in the international standard G.726.

It was later found that even more compression was possible, and additional standards were issued. Some of these international standards describe concepts and systems that are covered by privately owned patents, so the use of these standards is contingent on payments to the patent owners.

Certain ADPCM techniques are employed in voice over IP communications.

Encoding For Serial Transmission:

PCM audio signals can be either return-to-zero (RZ) or non-return-to-zero (NRZ). For an NRZ system to be synchronized using in-band information, there must be no long sequences of identical symbols, such as zeros or ones. In binary PCM systems, the density of the symbol “1” is called the ones-density.

The ones-density is typically controlled through precoding techniques such as run-length limited encoding, in which the PCM code is expanded into a slightly longer code with a guaranteed bound on the ones-density before modulation into the channel. In other cases, additional framing bits are added to the stream, ensuring that symbol transitions occur at least occasionally.

Applying a scrambler polynomial to the raw data is another method for controlling the ones-density. It tends to turn the data stream into one that appears pseudo-random, but the original data can be recovered exactly by reversing the effect of the polynomial. Long runs of zeros or ones are still possible on the output in this case, but they are considered unlikely enough to fall within engineering tolerances.

In other cases, the long-term direct-current (DC) value of the modulated signal is important, because a DC offset can bias detector circuits beyond their operating range. In this case, special measures are taken to keep track of the cumulative DC offset and to modify the codes as needed so that the DC offset always tends back to zero.

Many of these codes are bipolar codes, meaning that the pulses may be positive, negative, or absent. In the common alternate mark inversion code, non-zero pulses alternate between positive and negative. This rule can be deliberately violated to create special symbols used for framing or for other special purposes.
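A minimal sketch of alternate mark inversion (AMI) is shown below. The function names are hypothetical, pulses are represented as +1, -1, and 0, and the choice of a positive first pulse is an assumption made for this example.

```python
def ami_encode(bits):
    """Encode bits as bipolar pulses: 0 -> no pulse, 1 -> alternating +1/-1."""
    pulses = []
    polarity = 1  # assumption: the first mark is sent as a positive pulse
    for bit in bits:
        if bit:
            pulses.append(polarity)
            polarity = -polarity  # the next mark uses the opposite polarity
        else:
            pulses.append(0)
    return pulses


def has_bipolar_violation(pulses):
    """Return True if two consecutive non-zero pulses share the same polarity."""
    last = 0
    for pulse in pulses:
        if pulse != 0:
            if pulse == last:
                return True
            last = pulse
    return False


encoded = ami_encode([1, 0, 1, 1, 0, 1])
print(encoded)
print(sum(encoded))                      # stays within -1..1: no DC build-up
print(has_bipolar_violation(encoded))    # a valid AMI stream has none
print(has_bipolar_violation([1, 0, 1]))  # deliberate violation is detectable
```

Because the polarities alternate, the running sum of the pulses never drifts far from zero, which keeps the DC offset small, and a receiver can recognize a deliberate violation as a special symbol.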

History of Pulse Code Modulation Audio:

Let’s look at the history of PCM audio and how it changed the way we communicate.

The principal motive behind signal sampling in the early days of electrical communication was to combine signals from different telegraph sources and transmit them over a single cable. The idea of time-division multiplexing (TDM) was first developed in 1858 by the American inventor Moses Gerrish Farmer for two telegraph signals traveling over the same pair of conductors, for which he applied for a patent. The patent was issued in 1875.

Willard M. Miner, an electrical engineer, developed an electromechanical switch in 1903 to allow time-division multiplexing of telegraph signals. Miner also applied this technology to telephony. He obtained intelligible speech from channels sampled at rates of 3500 to 4300 Hz; however, the results were not as clear at lower rates. This was a kind of TDM; however, it employed pulse-amplitude modulation instead of PCM.

In 1920, the Bartlane cable system, a still image transmission technique named after its two British inventors, Harry Guy Bartholomew and Maynard Leslie Deedes McFarlane, used telegraph signaling of characters punched in paper tape to send images quantized to five gray levels. The number of gray levels was raised to 15 in 1929. A patent was applied for in the United Kingdom in 1921 and in the United States the following year, and the United States patent was granted in 1927.

Images could be transferred across the Atlantic Ocean, between the United States and the United Kingdom, in less than three hours. The receiver decoded them using telegraph printers fitted with the appropriate typefaces.

The first still image considered to be digital was produced in 1957, when Russell Kirsch processed a photo of his three-month-old son, 176 pixels by 176 pixels, with the SEAC (Standards Eastern Automatic Computer). NASA employed this technique and its refinements throughout the next decade to transmit remote sensing images.

On November 30, 1926, the American engineer Paul M. Rainey of Western Electric was granted a patent for a facsimile telegraph device that sent its signal using five-bit PCM, encoded with an opto-mechanical analog-to-digital converter.

The device never went into large-scale production. The Frenchman Edmond Maurice Deloraine and the British engineer Alec Reeves were not aware of this previous work. They came up with the concept of using PCM for voice communication in 1937 while employed by a French subsidiary of the American company International Standard Electric Corporation. The patent application outlined the concept and its advantages; however, it did not lead to any practical application.

Reeves and Deloraine applied for patents in France and the United States in 1938, and the patent was granted in 1941. The first transmission of digital voice used the SIGSALY encryption system, which was employed for high-level communication between the Allies during World War II, in 1943. That same year, the Bell Labs researchers who designed SIGSALY discovered that the idea of PCM had already been proposed by Alec Reeves.

In 1949, the Ferranti-Packard Company of Canada built the first radio system using PCM to transmit digitized radar information over long distances for the Canadian Navy’s DATAR system.

PCM systems in the 1950s used a cathode-ray tube with a perforated mesh for encoding. As in an oscilloscope, the beam was swept horizontally at the sampling rate, while the vertical deflection was controlled by the analog input signal, so that the beam passed through higher or lower portions of the mesh. The mesh interrupted the beam, producing current variations in binary code. The mesh was perforated so as to generate Gray code rather than natural binary.

Alongside its use in communications, PCM audio was also used for music production and recording. In 1967, engineers at the Technical Research Laboratories of NHK (Japan Broadcasting Corporation) created a monaural PCM audio recorder. Two years later, they developed a two-channel PCM audio recorder, which recorded audio at 32 kHz with 13-bit resolution onto a helical scan videotape recorder.

Between 1969 and 1971, the Japanese company Denon used NHK’s stereo recorder to make experimental recordings. The result was several of the first commercial digital recordings. The albums include “Something” by Steve Marcus, an American jazz saxophonist, and “The World of Stomu Yamashta” by Stomu Yamashta, a Japanese musician and composer. Both albums were released in 1971.

These recordings led Denon to create its own PCM audio equipment based on video recorders. It had eight audio channels, recorded at 47.25 kHz with 13-bit resolution. The first model, the DN-023R, was designed for use in recording studios in Tokyo. In 1977, Denon developed the DN-034R, a smaller, portable PCM audio recorder that was used in recording studios in Japan, France, and the United States to make commercial recordings.

The British Broadcasting Corporation (BBC) in the United Kingdom also explored the use of PCM audio technology. That led to a 13-channel audio system, developed in 1972 to improve the sound quality of its TV broadcasts. The system was used for a decade.

In the late 1970s, engineers at the now-defunct British company Decca Records also developed digital audio recording and post-production equipment for internal use. It was based on the IVC800 video recorder from the American company International Video Corporation.

The system was in use until the end of November. PolyGram Records, which had bought Decca Records, closed the “Decca Recording Center” and moved ten units to its Dutch subsidiary, which uses them to produce digital transcriptions of archive material.

In the United States, the company Soundstream was founded in 1975 by Thomas G. Stockham of the University of Utah. It was the first firm in the country to make commercial digital audio recordings. Its recording equipment, regarded as the first digital audio workstation, was built from ADC and DAC converters, commercial instrumentation magnetic tape units developed by Honeywell, and a DEC PDP-11/60 computer, which was used to store the audio. Editing was done by entering text commands on the computer’s command line.

In 1978, the 3M company joined the race to create PCM audio equipment with a two-channel recorder that sampled at 50 kHz with 16 bits per sample. Audio was recorded on tape running at 45 inches per second. The success of the 3M system led the company to design a 32-channel recorder.

Soundstream ceased operations in 1983. It was not able to compete with the Japanese company Sony Corporation, which had lowered the sampling rate to 44.1 kHz. 3M could not compete with Sony either, because its systems did not use video recorders to store digital audio as Sony’s did, an approach that proved more practical.

In Japan, Sony produced its first digital audio processor designed for home use, the PCM-1. It was followed by the PCM-1600, launched in March 1978, which used a video recorder in the U-matic format.

PCM audio recorders no longer require tape. Instead, computers use hard drives to store one or many channels, using devices such as sound cards, high-end microphones, and mixing consoles, together with free and commercial software for recording, editing, and mastering.
